iOS Voice-Driven Interfaces: What They Are & Why They Matter

Summary:

iOS voice-driven interfaces are redefining how users interact with their devices. This blog explores what iOS voice-driven interfaces are, how they function, and why they are becoming a critical component of modern app experiences.

From hands-free navigation to accessibility improvements and seamless user engagement, voice-driven technology is no longer just a trend—it’s a strategic advantage. Whether you’re a developer, designer, or product strategist, understanding the value of iOS voice-driven interfaces can help you create smarter, more intuitive applications.

Introduction

Technology feels different every year, but right now, voice control is stealing the spotlight. Many people no longer reach for screens first; they simply ask a question or issue a command. Apple watchers have noticed that the iOS world (HomePods, iPhones, and even Siri Shortcuts) is changing user habits more than most folks realize. Siri upgrades and new voice-accessibility settings keep rolling out with nearly every software update.

Voice assistants are popping up all over. Juniper Research projected that the global number of voice assistants in use would pass a whopping 8 billion in 2024, and experts expect that count to climb even higher in 2025 and after. Inside the Apple universe, Siri alone is chewing through more than 25 billion requests every month, a sign the tech has found its way into the daily habits of many iPhone users.

What Are iOS Voice-Driven Interfaces?

iOS voice-driven interfaces are the technologies and design patterns that let users control iPhone and iPad apps with spoken commands. Apple’s voice assistant, Siri, powers most of these interfaces, together with developer tools such as SiriKit and the Speech framework.

These tools let apps respond to spoken commands, transcribe speech into text, and trigger in-app actions with no need for physical input. Besides improving accessibility, this gives users faster access to features across many kinds of apps, including messaging, navigation, productivity, and health tracking.
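To make that flow concrete, here is a minimal Swift sketch of the two steps: turning speech into text with the Speech framework, then mapping the text to an in-app action. The `VoiceAction` type, the `action(forTranscript:)` keyword matcher, and `handleVoiceCommand(in:completion:)` are hypothetical names invented for illustration, and the keyword rules are a deliberately naive stand-in for real intent handling. It also assumes the app declares `NSSpeechRecognitionUsageDescription` in its Info.plist.

```swift
import Foundation
#if canImport(Speech)
import Speech
#endif

/// Hypothetical in-app actions, for illustration only (not part of any Apple API).
enum VoiceAction: Equatable {
    case startTimer(minutes: Int)
    case sendMessage
    case playMusic
    case unknown
}

/// Maps a transcript to an action with simple keyword matching.
/// This is an assumption-laden stand-in for proper intent modeling.
func action(forTranscript transcript: String) -> VoiceAction {
    let text = transcript.lowercased()
    if text.contains("timer") {
        // Take the first run of ASCII digits, e.g. the "10" in "timer for 10 minutes".
        let digitRange: ClosedRange<Character> = "0"..."9"
        let firstNumber = text
            .split(whereSeparator: { !digitRange.contains($0) })
            .first
            .flatMap { Int($0) }
        return .startTimer(minutes: firstNumber ?? 1) // Default to 1 minute if none is spoken.
    }
    if text.contains("message") { return .sendMessage }
    if text.contains("play") || text.contains("music") { return .playMusic }
    return .unknown
}

#if canImport(Speech)
/// Transcribes a recorded audio file, then routes the text to an action.
/// Reports `.unknown` if permission is denied, the recognizer is unavailable,
/// or recognition fails.
func handleVoiceCommand(in audioFile: URL, completion: @escaping (VoiceAction) -> Void) {
    SFSpeechRecognizer.requestAuthorization { status in
        guard status == .authorized,
              let recognizer = SFSpeechRecognizer(locale: Locale(identifier: "en-US")),
              recognizer.isAvailable else {
            completion(.unknown)
            return
        }
        let request = SFSpeechURLRecognitionRequest(url: audioFile)
        _ = recognizer.recognitionTask(with: request) { result, error in
            guard let result = result, result.isFinal else {
                if error != nil { completion(.unknown) }
                return
            }
            completion(action(forTranscript: result.bestTranscription.formattedString))
        }
    }
}
#endif
```

Keeping the transcript-to-action mapping separate from the recognition call means the routing logic can be exercised with plain strings, without a microphone or a device. In a shipping app, SiriKit intents would likely replace the keyword matcher.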

Voice is quickly becoming a preferred way to interact with devices. For that reason, organizations are now building voice features into their mobile strategies. To get it right, developers must be well-versed in Apple frameworks, user intent modeling, and natural language processing, all of which fall within the realm of modern iOS app development.

Whether you’re creating a business solution or a consumer app, integrating voice capabilities will set your product apart and align it with future trends in mobile interaction.

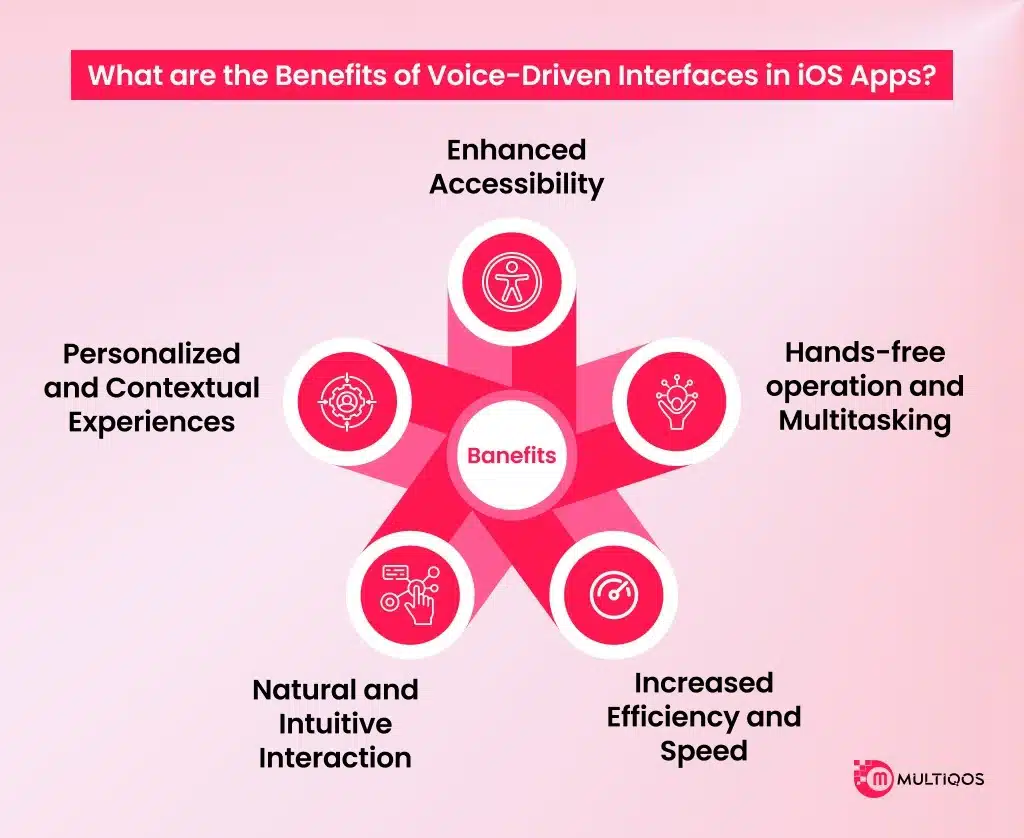

What are the Benefits of Voice-Driven Interfaces in iOS Apps?

Voice assistants have finally stopped feeling like sci-fi and started showing up in almost every iOS app you can name. Talking to the screen is now a faster, breezier way to get things done. Here are the five advantages you need to know:

1. Enhanced Accessibility

Few improvements could be labeled as truly game-changing, yet voice-first design, by many accounts, is one of them. People who are blind or have limited hand mobility often find ordinary touch screens stubbornly uncooperative, and spoken commands transform that frustration into something manageable.

Apple’s Voice Control goes beyond basic taps, letting a user scroll, type, pinch, or even doodle a signature with nothing but spoken commands, and that small miracle opens iPhones and iPads to millions more people.

2. Hands-Free Operation and Multitasking

Voice commands let people use apps when their hands are busy, for instance while exercising, cooking, driving, or carrying groceries. A user can set a timer, check the weather forecast, send a message, or control music without touching the device, which is both more convenient and safer.

3. Increased Efficiency and Speed

A quick voice command often beats the clatter of a keyboard or the slow crawl through menus. When the software listens instead of waiting, whole workflows tidy themselves up in seconds. Try dictating an email or barking out a search term; either usually clocks a hefty time gain over thumb-typing.

4. Natural and Intuitive Interaction

Voice is our most natural way to communicate, and its appeal lies in its simplicity. Voice-driven interfaces use natural language processing to make software feel more natural and conversational. This lowers the barrier to entry for new users and makes technology accessible to people of all ages.

5. Personalized and Contextual Experiences

Voice interfaces have evolved thanks to AI and machine learning. They can now learn from a user’s past interactions and respond with more contextually appropriate answers. For example, a voice assistant can recall a user’s choices, anticipate their needs, and offer tailored suggestions, making the app experience more personal and more useful.

Conclusion

iOS now lets your voice do the talking, and that simple twist is changing how we all tap, swipe, and scroll. With Siri baked right in, plus a growing list of app-specific voice shortcuts, what felt like science fiction a few years ago is just plain normal.

Give the trend a serious look; adding voice controls could push your app ahead of the pack and leave users smiling. If you want to create voice-based services that work well within the Apple environment, then you need to hire iOS app developers who are well-versed in voice communication, natural language processing, and Apple’s development frameworks.

That expertise helps ensure your application not only feels modern but is also user-friendly and built to last.

FAQs

Voice assistants make it easy for almost anyone to use an app, even if they can’t see the screen. Because you talk instead of typing, things get done faster, and mistakes tend to drop off. Folks of every skill level can go hands-free and still feel in control.

Plenty of iOS apps can use voice power from Apple’s APIs. Builders usually tap SiriKit or the Speech framework, and they support the system’s Voice Control feature through the standard accessibility APIs. Even so, adding speech features takes careful planning and lots of testing to keep everything running smoothly.

Built the right way, voice-controlled tech can actually be pretty safe. Take Apple, for instance. The company enforces tough privacy rules, and developers, whether they love those rules or hate them, often bolt on extras like fingerprint or Face ID checks whenever the stakes are high.
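As a rough illustration of that extra check, the Swift sketch below gates sensitive voice commands behind Face ID or Touch ID using Apple’s LocalAuthentication framework. The `VoiceCommand` cases, the `requiresAuthentication(_:)` rule, and the `perform(_:action:)` helper are hypothetical names chosen for this example, and which commands count as "high stakes" is an assumption each app would decide for itself. Face ID also requires an `NSFaceIDUsageDescription` entry in Info.plist.

```swift
import Foundation
#if canImport(LocalAuthentication)
import LocalAuthentication
#endif

/// Hypothetical voice commands, for illustration only.
enum VoiceCommand {
    case checkWeather
    case playMusic
    case sendPayment(amount: Double)
    case unlockDoor
}

/// Decides whether a spoken command is sensitive enough to need an identity check.
/// The rule set here is an assumption, not an Apple requirement.
func requiresAuthentication(_ command: VoiceCommand) -> Bool {
    switch command {
    case .sendPayment, .unlockDoor:
        return true
    case .checkWeather, .playMusic:
        return false
    }
}

#if canImport(LocalAuthentication)
/// Runs `action` right away for low-risk commands, and only after a
/// successful biometric check for sensitive ones.
func perform(_ command: VoiceCommand, action: @escaping () -> Void) {
    guard requiresAuthentication(command) else {
        action()
        return
    }
    let context = LAContext()
    var error: NSError?
    guard context.canEvaluatePolicy(.deviceOwnerAuthenticationWithBiometrics, error: &error) else {
        return // Biometrics unavailable: refuse rather than run unverified.
    }
    context.evaluatePolicy(.deviceOwnerAuthenticationWithBiometrics,
                           localizedReason: "Confirm this voice command") { success, _ in
        // The reply may arrive on a background queue, so hop to the main queue.
        if success { DispatchQueue.main.async { action() } }
    }
}
#endif
```

Low-risk requests such as checking the weather stay frictionless, while payments or door locks pause for confirmation.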

Industries such as healthcare, shipping, smart homes, online shopping, and daily productivity apps all stand to win by making voice a primary way to issue commands.
