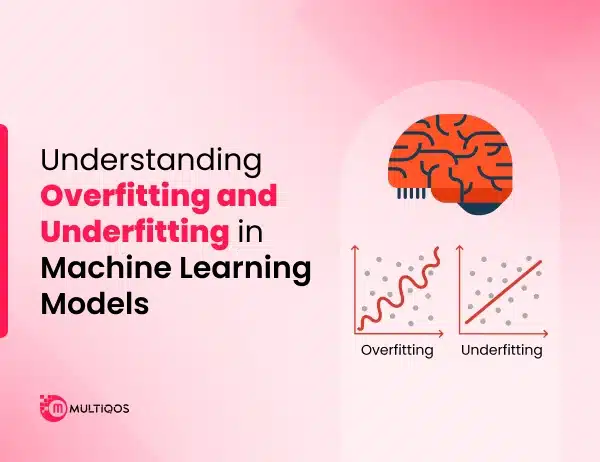

Understanding Overfitting and Underfitting in Machine Learning Models

Summary:

Overfitting and underfitting in machine learning are two fundamental issues that can significantly affect the performance and reliability of predictive models. Overfitting occurs when a model learns the training data too well, capturing noise and outliers rather than general patterns, leading to poor performance on unseen data.

On the other hand, underfitting happens when a model is too simplistic to grasp the underlying structure of the data, resulting in inaccurate predictions even on training data. Both scenarios highlight the importance of model generalization—the ability of a machine learning model to perform well on new, unseen datasets.

A deep understanding of overfitting and underfitting in machine learning not only helps improve model accuracy but also contributes to building systems that are more scalable, adaptable, and impactful across real-world use cases.

Introduction

Ever built a machine learning model that performs perfectly during training, but completely misses the mark when tested on new data? It’s frustrating, and usually comes down to one of two things: overfitting or underfitting.

The terms may sound technical, but the idea is simple. Overfitting means the model tries too hard to memorize the training data, including small details that do not really matter. Underfitting is the opposite: the model does not learn enough and misses the pattern completely.

Understanding both is key if you want your model to actually work in the real world. In this post, we’ll explain what each one really means, why they occur, and what you can do to handle them.

What is Overfitting and Underfitting in Machine Learning?

In machine learning, model accuracy is not just about getting the right answer during training; it is about making accurate predictions on new, unseen data. This is where overfitting and underfitting in machine learning come into play.

Overfitting occurs when a model learns the training data too well, including random noise and irrelevant details. As a result, it performs well during training but poorly on test data because it does not generalize.

On the other hand, underfitting occurs when a model is too simple to capture the pattern in the data. It performs poorly on both the training and test datasets because it has not learned enough.

Both are common problems that developers encounter when building models, and knowing how to avoid them matters even more as increasingly complex algorithms feature in today’s top machine learning trends.
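To make the two failure modes concrete, here is a minimal sketch using NumPy. The data is synthetic (a noisy sine curve), and the polynomial degrees 1, 4, and 12 are illustrative choices rather than recommendations. The idea is to compare training error with test error as model complexity grows.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_data(n):
    """Noisy samples of a sine curve (a synthetic stand-in for real data)."""
    x = rng.uniform(0.0, 1.0, n)
    y = np.sin(2 * np.pi * x) + rng.normal(0.0, 0.2, n)
    return x, y

x_train, y_train = make_data(30)
x_test, y_test = make_data(200)

def mse(model, x, y):
    """Mean squared error of a fitted polynomial on the given data."""
    return float(np.mean((model(x) - y) ** 2))

results = {}
for degree in (1, 4, 12):
    # Least-squares polynomial fit; a higher degree means a more complex model.
    model = np.polynomial.Polynomial.fit(x_train, y_train, degree)
    results[degree] = (mse(model, x_train, y_train), mse(model, x_test, y_test))
    print(f"degree={degree:2d}  train MSE={results[degree][0]:.3f}  "
          f"test MSE={results[degree][1]:.3f}")
```

In a run like this, the degree-1 model typically shows high error on both sets (underfitting), while the degree-12 model shows a very low training error alongside a noticeably higher test error (overfitting).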

Bias and Variance in Machine Learning

Bias and variance are the two main concepts that determine how well a machine learning model works, specifically when it comes to generalizing to new data.

- Bias refers to errors that come from overly simplified assumptions in the learning algorithm. A high-bias model pays very little attention to the training data and misses the underlying pattern. This often leads to underfitting, where the model performs poorly on both training and test data.

- Variance, on the other hand, is the model’s sensitivity to small fluctuations in the training set. A high-variance model reacts strongly to the training data and treats noise as if it were a real pattern. This usually results in overfitting, where the model performs well on training data, but poorly on new or unseen data.

Finding the right balance between bias and variance is known as the bias-variance tradeoff. It is one of the most important parts of building a reliable and effective ML model. Too much of either leads to errors; the goal is to find a sweet spot where the model is complex enough to learn the underlying pattern, but not so complex that it fits the noise.
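The tradeoff can be estimated empirically. The sketch below is an illustration under stated assumptions: the true function (a sine curve), the noise level, and the degrees 1 and 9 are all made up for the demo. It refits a polynomial on many noisy versions of the same training set, then measures how far the average prediction sits from the truth (squared bias) and how much the predictions vary between fits (variance).

```python
import numpy as np

rng = np.random.default_rng(42)

def true_fn(x):
    """The pattern we pretend not to know (assumed for this demo)."""
    return np.sin(2 * np.pi * x)

x_train = np.linspace(0.0, 1.0, 30)  # fixed inputs; only the noise changes
x_eval = np.linspace(0.0, 1.0, 50)   # points where predictions are compared

def bias_variance(degree, n_trials=200, noise=0.3):
    """Estimate squared bias and variance of a polynomial model of given degree."""
    preds = np.empty((n_trials, x_eval.size))
    for trial in range(n_trials):
        # Each trial sees the same inputs with freshly drawn noise.
        y_train = true_fn(x_train) + rng.normal(0.0, noise, x_train.size)
        model = np.polynomial.Polynomial.fit(x_train, y_train, degree)
        preds[trial] = model(x_eval)
    mean_pred = preds.mean(axis=0)
    bias_sq = float(np.mean((mean_pred - true_fn(x_eval)) ** 2))
    variance = float(np.mean(preds.var(axis=0)))
    return bias_sq, variance

simple_bias, simple_var = bias_variance(degree=1)
complex_bias, complex_var = bias_variance(degree=9)
print(f"degree 1: bias^2={simple_bias:.4f}  variance={simple_var:.4f}")
print(f"degree 9: bias^2={complex_bias:.4f}  variance={complex_var:.4f}")
```

The straight line should show the larger squared bias, and the degree-9 polynomial the larger variance, which is the pattern the two bullet points above describe.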

Key Differences Between Overfitting and Underfitting in Machine Learning Models

The table below summarizes the key differences between overfitting and underfitting in machine learning and how each one affects model performance.

| Aspect | Overfitting | Underfitting |
| --- | --- | --- |
| Definition | The model learns the training data too well, including noise and outliers | The model is too simple to capture the underlying structure of the data |
| Model Complexity | Too complex | Too simple |
| Training Accuracy | High | Low |
| Validation/Test Accuracy | Low (poor generalization) | Low (poor learning) |
| Bias | Low bias | High bias |
| Variance | High variance | Low variance |
| Cause | Too many features, too long training, not enough regularization | Model is not trained enough, or lacks complexity |
| Example Scenario | A deep neural network memorizes every training point | A linear model trying to fit non-linear data |
| Detection | Large gap between training and validation performance | Both training and validation errors are high |
| Solution | Regularization, pruning, simpler model, more data | Add features, increase model complexity, and train longer |

How to Detect Overfitting and Underfitting in Machine Learning?

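Determining whether a model is overfitting or underfitting usually comes down to comparing how it performs on training data versus validation or test data. A large gap between training and validation performance points to overfitting, while high error on both points to underfitting.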
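As a rough illustration, that comparison can be written as a small helper. The function name `diagnose_fit` and both thresholds (`min_score`, `max_gap`) are hypothetical; sensible values depend on the task and the metric you use.

```python
def diagnose_fit(train_score, val_score, min_score=0.8, max_gap=0.1):
    """Classify a model's fit from training and validation accuracy (0 to 1).

    Both thresholds are illustrative assumptions, not standard values.
    """
    if train_score < min_score:
        # The model cannot even fit the data it was trained on.
        return "underfitting"
    if train_score - val_score > max_gap:
        # Strong on training data, much weaker on held-out data.
        return "overfitting"
    return "reasonable fit"

print(diagnose_fit(0.99, 0.70))
print(diagnose_fit(0.60, 0.58))
print(diagnose_fit(0.90, 0.88))
```

A check like this is only a first pass. Plotting training and validation scores over time usually gives a clearer picture than a single pair of numbers.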

How to Reduce Underfitting?

If your model is underfitting, it is not learning enough from the data. There are several ways to fix this:

- Use a more complex model: Try switching to a model that can capture more complex relationships.

- Increase training time: Some models need more epochs or iterations to learn effectively.

- Reduce regularization: If you use L1 or L2 regularization, it may be constraining the model too much.

- Add more relevant features: More informative inputs can help the model capture the underlying pattern better.

- Improve feature engineering: Transforming existing features or creating new ones often helps the model learn more from the data.
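The feature-related fixes above can be sketched with a small example. The data here is synthetic (a noisy parabola), and the helper name `fit_and_score` is made up for illustration. A plain linear model underfits this data, and adding a squared feature gives it the capacity it was missing.

```python
import numpy as np

rng = np.random.default_rng(1)

# Synthetic non-linear data: y depends on x squared, plus noise.
x = rng.uniform(-3.0, 3.0, 200)
y = x ** 2 + rng.normal(0.0, 0.5, 200)

def fit_and_score(features, target):
    """Fit ordinary least squares on the given feature columns; return training MSE."""
    design = np.column_stack([np.ones(len(target))] + features)  # add intercept
    weights, *_ = np.linalg.lstsq(design, target, rcond=None)
    return float(np.mean((design @ weights - target) ** 2))

mse_linear = fit_and_score([x], y)             # raw feature only: underfits
mse_quadratic = fit_and_score([x, x ** 2], y)  # engineered feature added

print(f"linear features:    training MSE = {mse_linear:.2f}")
print(f"with x**2 feature:  training MSE = {mse_quadratic:.2f}")
```

The learning algorithm is the same in both fits. Only the inputs change, which is why feature engineering is often the cheapest fix to try first.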

How to Reduce Overfitting?

If your model performs very well on training data but poorly on validation or test data, it is overfitting. Here is how to address it:

- Use regularization: Apply L1 (Lasso) or L2 (Ridge) regularization to penalize overly complex models.

- Simplify the model: Reduce the number of layers, nodes, or parameters.

- Prune decision trees: For tree-based models, pruning removes unnecessary branches and helps the model generalize better.

- Use dropout (for neural networks): This randomly drops some units during training to prevent reliance on specific paths.

- Collect more training data: More data lets the model see a wider range of examples and reduces the chance of memorizing noise.

- Use cross-validation: Techniques such as k-fold cross-validation check that the model performs consistently across different subsets of the data.
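Two of these techniques, L2 regularization and k-fold cross-validation, combine naturally. The sketch below is a minimal NumPy version under stated assumptions: the dataset is synthetic, the candidate `alphas` are arbitrary, and the helper names `ridge_fit` and `kfold_mse` are made up for this example. In practice a library such as scikit-learn would usually handle both steps.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: 40 samples, 30 features, but only the first 3 features matter.
X = rng.normal(size=(40, 30))
true_w = np.zeros(30)
true_w[:3] = [2.0, -1.0, 0.5]
y = X @ true_w + rng.normal(0.0, 1.0, 40)

def ridge_fit(X, y, alpha):
    """Closed-form L2-regularized least squares (no intercept, for brevity)."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)

def kfold_mse(X, y, alpha, k=5):
    """Average validation MSE over k contiguous folds."""
    indices = np.arange(len(y))
    errors = []
    for fold in np.array_split(indices, k):
        train = np.setdiff1d(indices, fold)  # every row not in the held-out fold
        w = ridge_fit(X[train], y[train], alpha)
        errors.append(np.mean((X[fold] @ w - y[fold]) ** 2))
    return float(np.mean(errors))

alphas = [1e-6, 0.1, 1.0, 10.0, 100.0]
scores = {alpha: kfold_mse(X, y, alpha) for alpha in alphas}
best_alpha = min(scores, key=scores.get)

for alpha in alphas:
    print(f"alpha={alpha:<8g} cross-validated MSE={scores[alpha]:.3f}")
print("selected alpha:", best_alpha)
```

With almost as many features as samples, the nearly unregularized fit tends to score poorly on held-out folds, while a moderate penalty shrinks the weights and generalizes better. The folds are contiguous because the rows are already random; real data usually needs shuffling first.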

Conclusion

After reading this post, you should understand how important it is to strike a balance between overfitting and underfitting in machine learning when it comes to creating reliable and accurate models. Overfitting leads to models that perform well on training data but struggle in real-world scenarios, while underfitting leads to models that fail to capture significant patterns in the first place.

For teams working on AI-powered solutions, partnering with a leading machine learning development company provides valuable guidance and technical expertise. Using the right techniques at the right development phase can help you create models that generalize well and provide meaningful results. And if you want to scale your in-house team, it is important to hire ML developers who are not only skilled in model building but also experienced in detecting and fixing these common problems.

FAQs

There are a few reasons why underfitting might happen. Maybe the model isn’t complex enough. Maybe it didn’t train long enough.

A common sign of overfitting is when your model performs really well on training data but does much worse on validation or test data. That gap is usually a giveaway.

To reduce overfitting, there are several things you can try:

- Use simpler models

- Add more training data if you can

- Use techniques like regularization or dropout

- Stop training early if the model starts to get worse on validation data
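The last item, early stopping, can be sketched as a small helper. The function name `early_stopping_epoch` and the `patience` value are assumptions for illustration. Deep learning frameworks provide their own callbacks for this, usually with more options.

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return the index of the epoch whose weights you would keep.

    Scanning stops once the validation loss has failed to improve for
    `patience` consecutive epochs (an illustrative rule, not a standard API).
    """
    best_epoch = 0
    best_loss = float("inf")
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss = loss
            best_epoch = epoch
        elif epoch - best_epoch >= patience:
            break  # no improvement for `patience` epochs: stop training
    return best_epoch

# Validation loss improves, then drifts upward as the model starts to overfit.
history = [1.00, 0.80, 0.60, 0.65, 0.70, 0.75, 0.50]
print(early_stopping_epoch(history, patience=3))
print(early_stopping_epoch(history, patience=5))
```

A small `patience` stops sooner and saves compute, but it can miss a later improvement, as the final value in `history` shows. That is the tradeoff to tune.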

To fix underfitting, you might want to:

- Use a more complex model

- Train it for longer

- Choose better features

- Reduce any regularization that might be holding it back
