Why Are Golang and LLM a Powerful Duo for Modern Development?

Summary:

We’ll explore how Golang and large language models (LLMs) together enable developers to build smarter, faster, and more scalable software. Go’s strengths, such as concurrency, speed, and simplicity, make it a strong choice for serving and integrating LLMs in real-world applications.

Whether you’re building intelligent chat interfaces, automating document workflows, or scaling microservices that rely on language understanding, combining Golang and LLMs creates a high-performance development stack. This post covers the key benefits of the pairing, practical use cases, and why it is becoming increasingly popular among modern development teams.

Introduction

Pairing Golang with LLMs is a relatively recent practice in the tech industry, and it has drawn growing attention because of the advantages it offers for machine learning and language-model applications. As emerging technologies automate more processes, developers keep looking for programming languages and frameworks that offer greater capabilities while delivering speed and accuracy. Go is known for its straightforward syntax, built-in concurrency, and efficient use of resources, which makes it easier for companies to adopt when scaling these systems.

In this article, we will discuss the features that Golang and LLMs bring to software engineering, their combined advantages, and the main reasons this stack is well suited to practical implementations.

Why Choose Golang for LLM Development?

Here are the aspects of Golang that matter most for building powerful, efficient LLM applications, from speed to scalability.

1. High Performance & Efficiency

Golang is known for fast compilation to native code and for lightweight concurrency primitives called goroutines. Together, these let Go-based large language model (LLM) applications respond quickly, especially for real-time inference, API services, and highly parallel tasks such as prompt routing or vector similarity search.

2. Robust Concurrency Support

Go’s built-in concurrency features, goroutines and channels, make it straightforward to process many LLM requests in parallel. This suits applications such as chatbots, multi-agent systems, and streaming LLM output, where one slow request should not stall the others.

3. Minimal Memory Footprint

Go applications use memory and CPU efficiently, which helps when deploying LLMs, embedding models, or inference engines on edge devices or in other memory-constrained environments.

4. Scalable & Maintainable Architecture

Golang encourages modular, clean, and scalable design. Go services fit naturally into microservice architectures, which makes it easier to scale LLM deployments horizontally in cloud-native environments.

5. Rich Ecosystem & LLM Integrations

Community frameworks such as LLamaIndex-Go and GoLangChain have started adding integrations with OpenAI, Cohere, Hugging Face, and vector databases such as Pinecone and Weaviate. These integrations help teams quickly build and deploy custom applications powered by LLMs.

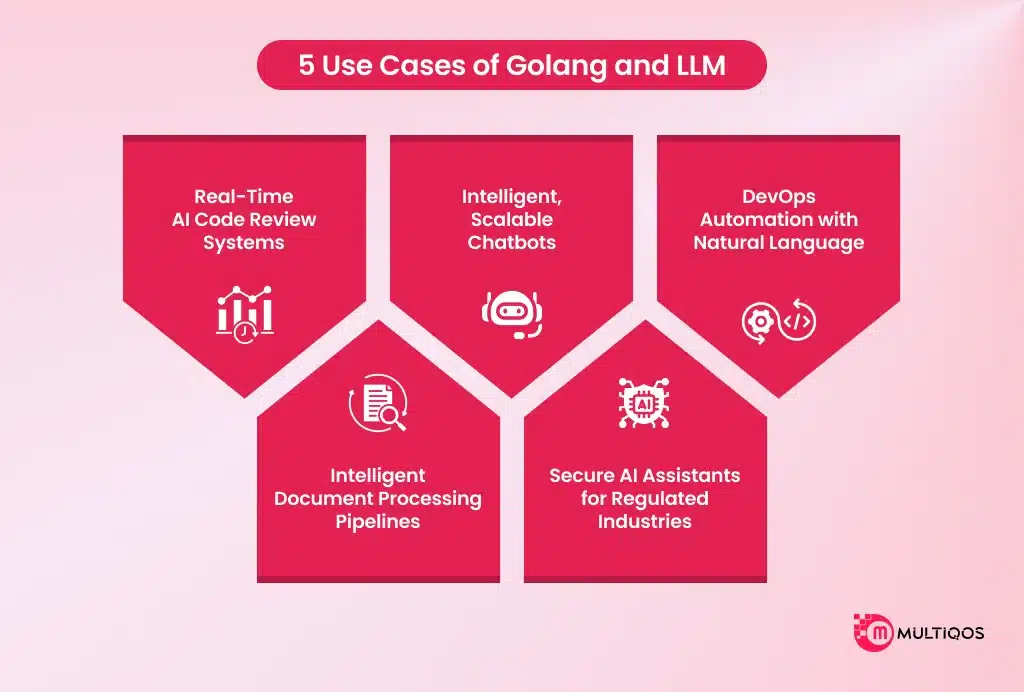

5 Use Cases of Golang and LLM

Combine Golang’s raw server-side speed with a conversational large language model and, suddenly, your software can think fast and behave intelligently in the same breath. The result is compact, responsive services that keep track of context without buckling under traffic. Teams in healthcare, finance, e-commerce, and beyond are adopting this pairing right now.

1. Real-Time AI Code Review Systems

Many teams now lean on Golang’s raw speed and built-in concurrency to build services that watch their Git repositories around the clock. A lightweight, embedded LLM then scans each commit, sniffs out likely bugs, recommends tweaks, and offers a plain-language summary within seconds.

Example: A Go microservice sits inline with the CI pipeline, listens for GitHub webhooks, and feeds new code diffs into an LLM. A few seconds later (depending on the queue), a bot posts polished feedback back into the pipeline so the developer can catch issues before merging.
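The webhook-listening half of such a service can be sketched with the standard library alone. This minimal version assumes GitHub push events, reading only the `after` commit SHA and `repository.full_name` fields, and queues jobs on a buffered channel so slow model calls never hold up the webhook response. `ReviewJob`, `reviewWithLLM`, and the 1 MiB body cap are hypothetical; a real service must also verify the `X-Hub-Signature-256` header and fetch the actual diff, both of which this sketch leaves out.

```go
package main

import (
	"encoding/json"
	"errors"
	"io"
	"log"
	"net/http"
)

// ReviewJob is the unit of work handed to the LLM reviewer.
type ReviewJob struct {
	Repo   string
	Commit string
}

// pushEvent holds only the fields this sketch reads from a GitHub push payload.
type pushEvent struct {
	After      string `json:"after"` // commit SHA after the push
	Repository struct {
		FullName string `json:"full_name"`
	} `json:"repository"`
}

// parsePush extracts the repository and head commit from a push payload.
func parsePush(body []byte) (ReviewJob, error) {
	var ev pushEvent
	if err := json.Unmarshal(body, &ev); err != nil {
		return ReviewJob{}, err
	}
	if ev.After == "" {
		return ReviewJob{}, errors.New("payload has no commit SHA")
	}
	return ReviewJob{Repo: ev.Repository.FullName, Commit: ev.After}, nil
}

// tryEnqueue adds a job without blocking and reports false if the queue is full.
func tryEnqueue(queue chan<- ReviewJob, job ReviewJob) bool {
	select {
	case queue <- job:
		return true
	default:
		return false
	}
}

// webhookHandler accepts POSTed push events and queues them for review.
// NOTE: a real service must verify the X-Hub-Signature-256 header first.
func webhookHandler(queue chan<- ReviewJob) http.HandlerFunc {
	return func(w http.ResponseWriter, r *http.Request) {
		if r.Method != http.MethodPost {
			http.Error(w, "method not allowed", http.StatusMethodNotAllowed)
			return
		}
		body, err := io.ReadAll(io.LimitReader(r.Body, 1<<20)) // cap payloads at 1 MiB
		if err != nil {
			http.Error(w, "cannot read body", http.StatusBadRequest)
			return
		}
		job, err := parsePush(body)
		if err != nil {
			http.Error(w, err.Error(), http.StatusBadRequest)
			return
		}
		if !tryEnqueue(queue, job) {
			http.Error(w, "review queue full", http.StatusServiceUnavailable)
			return
		}
		w.WriteHeader(http.StatusAccepted) // review happens asynchronously
	}
}

// reviewWithLLM is a hypothetical placeholder: a real version would fetch the
// diff for job.Commit, send it to a model, and post the feedback back to CI.
func reviewWithLLM(job ReviewJob) {
	log.Printf("reviewing %s@%s", job.Repo, job.Commit)
}

func main() {
	queue := make(chan ReviewJob, 100)
	// A deployment would call http.Handle("/webhook", webhookHandler(queue)) and
	// http.ListenAndServe, with a goroutine draining queue. Here we feed one
	// sample payload directly so the example runs offline.
	job, err := parsePush([]byte(`{"after":"3f2a9c1","repository":{"full_name":"acme/api"}}`))
	if err != nil {
		log.Fatal(err)
	}
	if tryEnqueue(queue, job) {
		reviewWithLLM(<-queue)
	}
}
```

Answering with 202 Accepted right away and reviewing asynchronously keeps webhook deliveries fast even when a model call takes several seconds.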

2. Intelligent, Scalable Chatbots

Goroutines let Go servers absorb high concurrency, so they stay responsive even under a crush of users. Drop a tuned LLM into the same pipeline and you get a bot that answers almost before the browser blinks, yet still remembers the last three questions.

Example: A messaging API written in Go sends a customer’s question to an LLM along with the latest product details and returns a useful reply before you can check your watch.

3. DevOps Automation with Natural Language

When you pair Go’s cloud-native tooling with a capable language model, developers can talk to their infrastructure almost the way they talk to a colleague. The Go service validates commands before running them and integrates with cloud provider SDKs, while the model interprets plain speech, generates the needed shell commands or YAML on the fly, and turns messy error messages into English that makes sense at midnight.

Example: A developer types a one-line request into the terminal: “Scale my Kubernetes cluster to 5 nodes.” A lightweight Go service on the back end reads the request, issues the scaling commands, and before you can blink, new nodes are already being provisioned.
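One way to keep natural-language commands safe is to ask the model for a structured JSON action rather than raw shell, then validate that action in Go before anything touches the cluster. In this minimal sketch, the JSON schema (`action`, `target`, `nodes`), the `scale_nodes` allowlist entry, and the 20-node ceiling are all hypothetical, and the actual cloud-provider call is left as a comment.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// Action is the structured output the model is asked to produce (hypothetical schema).
type Action struct {
	Action string `json:"action"`
	Target string `json:"target"`
	Nodes  int    `json:"nodes"`
}

// allowedActions lists the only operations this service will ever perform.
var allowedActions = map[string]bool{"scale_nodes": true}

const maxNodes = 20 // hypothetical safety ceiling

// ValidateAction parses the model's JSON reply and rejects anything outside policy.
func ValidateAction(modelReply []byte, knownClusters map[string]bool) (Action, error) {
	var a Action
	if err := json.Unmarshal(modelReply, &a); err != nil {
		return Action{}, fmt.Errorf("model reply is not valid JSON: %w", err)
	}
	if !allowedActions[a.Action] {
		return Action{}, fmt.Errorf("action %q is not allowed", a.Action)
	}
	if !knownClusters[a.Target] {
		return Action{}, fmt.Errorf("unknown cluster %q", a.Target)
	}
	if a.Nodes < 1 || a.Nodes > maxNodes {
		return Action{}, fmt.Errorf("node count %d outside 1..%d", a.Nodes, maxNodes)
	}
	return a, nil
}

func main() {
	clusters := map[string]bool{"prod-eu": true}
	// Pretend the LLM turned "Scale my Kubernetes cluster to 5 nodes" into this JSON.
	reply := []byte(`{"action":"scale_nodes","target":"prod-eu","nodes":5}`)
	a, err := ValidateAction(reply, clusters)
	if err != nil {
		fmt.Println("rejected:", err)
		return
	}
	// A real service would now call the cloud provider's node-pool API.
	fmt.Printf("approved: scale %s to %d nodes\n", a.Target, a.Nodes) // prints: approved: scale prod-eu to 5 nodes
}
```

Because the model’s output is treated as untrusted input, a misheard request such as “delete the cluster” fails validation instead of running.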

4. Intelligent Document Processing Pipelines

Go pairs fast file I/O with lightweight goroutines, which lets teams process huge documents or near-real-time streams without leaning on bulky frameworks. Lawyers, bankers, and clinicians all work with wordy contracts or patient notes, so an engine that starts parsing while the upload is still running feels like magic.

Example: A lightweight Go app pulls in PDF files, slices the text into bite-sized pieces, and fires those morsels off to a language model. In just a few heartbeats the service spits back tidy summaries and neatly tagged classifications.
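The “slice the text into bite-sized pieces” step is usually a chunker that splits extracted text into overlapping windows so each piece fits the model’s context. Here is a minimal word-based sketch, assuming the PDF text has already been extracted (PDF parsing itself is out of scope). `ChunkWords` and its size and overlap parameters are hypothetical; production pipelines often count model tokens rather than words.

```go
package main

import (
	"fmt"
	"strings"
)

// ChunkWords splits text into chunks of at most size words, where consecutive
// chunks share `overlap` words so context is not lost at the boundaries.
// It requires 0 <= overlap < size.
func ChunkWords(text string, size, overlap int) ([]string, error) {
	if size <= 0 || overlap < 0 || overlap >= size {
		return nil, fmt.Errorf("invalid size=%d overlap=%d", size, overlap)
	}
	words := strings.Fields(text)
	var chunks []string
	step := size - overlap
	for start := 0; start < len(words); start += step {
		end := start + size
		if end > len(words) {
			end = len(words)
		}
		chunks = append(chunks, strings.Join(words[start:end], " "))
		if end == len(words) {
			break // this window already reached the end of the text
		}
	}
	return chunks, nil
}

func main() {
	text := "one two three four five six seven eight nine ten"
	chunks, err := ChunkWords(text, 4, 1)
	if err != nil {
		panic(err)
	}
	for i, c := range chunks {
		fmt.Printf("chunk %d: %s\n", i, c)
	}
}
```

Each chunk can then be sent to the model independently, for example with one goroutine per chunk, and the per-chunk summaries merged afterwards.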

5. Secure AI Assistants for Regulated Industries

Finance and healthcare don’t forgive blunders, so any software aimed at them must be fast yet uncompromisingly secure. Go’s memory safety and garbage collection help avoid whole classes of memory bugs, and its reliability builds trust. On top of that, language models jump in to parse numbers, draft reports, work through policy checks, and still finish on time.

Example: A Go service built to HIPAA requirements handles encryption, issues access tokens, and controls who sees what. Meanwhile, a large language model quietly works through clinical questions and produces patient overviews, all drawn from the protected data store.

Conclusion

Demand for intelligent, high-performing applications is growing quickly, so it is no surprise that many modern development teams are pairing Golang with LLMs. Go contributes speed, concurrency, and scalability, while LLMs add reasoning and language comprehension. Together, they make it easier to build responsive, intelligent, production-grade software.

Looking to bring these benefits to your next project? Start by bringing the right talent on board. Whether you are building smart backend systems, real-time chatbots, or data-rich applications, now is a good time to hire Golang developers who can expertly integrate and optimize LLMs.

FAQs

Yes. Go applications can integrate with LLM APIs with relative ease, using the standard HTTP client or third-party SDKs to handle communication between the application and the language model.

Absolutely. Go’s low latency and strong concurrency support make it well suited to real-time LLM-based systems and automated workflows.

Although Go has fewer LLM libraries than Python, it offers client SDKs and other tools that let developers work with LLM services such as OpenAI and Cohere, as well as with APIs for locally hosted models.

To take full advantage of Golang for LLM applications, you need developers experienced with Go’s architecture, performance tuning, and API integrations. Seasoned Golang developers help keep applications performant and scalable as they grow.

Common applications include smart chatbots, document summarizers, customer service automation, intelligent content workflows, and text autocompletion, each of which can be built on a Golang backend integrated with LLM technologies.
